With the rising adoption of machine learning, marketers are increasingly using algorithms to predict consumer behaviour and target ads more accurately at specific individuals. Machine learning can be used to infer details such as sex, religion, age, political beliefs and other personal information without users having to reveal that information themselves. This naturally raises privacy concerns.

How concerned about our privacy should we be?

In 2012, an infamous story circulated about a teen who was sent coupons for baby items by the US retailer Target. The company had developed an algorithm that took in information about customers’ habits and individualised ads and promotions for them. The algorithm had learned which items pregnant customers browse and at what point during their pregnancy they buy certain products. Target’s marketers used this information to identify other pregnant customers and send them coupons for baby items.

The teen’s father called Target, angry that his daughter was receiving those coupons when she was just a child and could not be pregnant. Target apologised. A few days later, though, the father called back to apologise himself: it had turned out that his daughter was indeed pregnant. When the story broke, it was spun in a way that made the algorithm seem omniscient. However, the algorithm did not predict something that was unknown to everyone. The daughter knew, and she was preparing. It is likely that her browsing and shopping habits flagged her to the algorithm as a possibly pregnant customer. Additionally, one anecdote says nothing about the algorithm’s accuracy. How many pregnant teens did not receive coupons, and how many customers who were not pregnant did? Confirmation bias leads us to focus only on the times the algorithm was proven correct and to ignore the many times it was probably wrong.
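To make the accuracy point concrete, here is a minimal sketch of the two numbers an anecdote cannot give us: precision (how many coupon recipients were actually pregnant) and recall (how many pregnant customers received coupons). The counts are invented purely for illustration and are not Target's figures.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Return (precision, recall) for a yes/no prediction such as 'is pregnant'."""
    precision = true_pos / (true_pos + false_pos)  # share of coupons sent to pregnant customers
    recall = true_pos / (true_pos + false_neg)     # share of pregnant customers who got coupons
    return precision, recall


# Hypothetical counts, NOT real data:
# 60 pregnant customers received coupons, 140 non-pregnant customers received
# them anyway, and 40 pregnant customers were missed entirely.
precision, recall = precision_recall(true_pos=60, false_pos=140, false_neg=40)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

With these made-up numbers, most coupons would go to people who are not pregnant, yet a single correct hit would still make a memorable headline.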

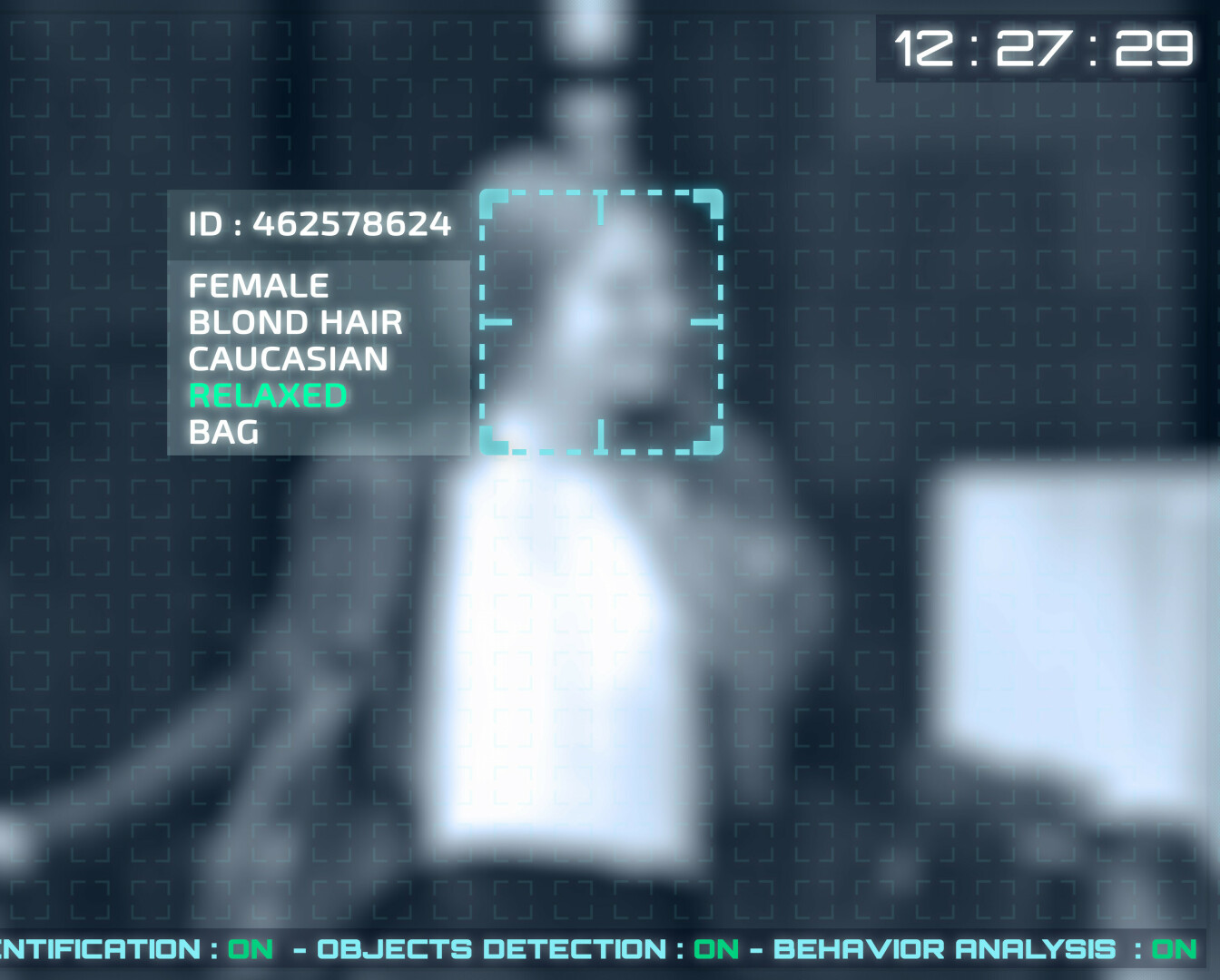

With more and more people wanting personalization, there is an increased focus on gathering information about consumers that algorithms can then use to create targeted advertising. Microsoft’s earlier use of the Xbox’s Kinect camera to obtain physical information about players, for example, caused concern. Facial recognition can further be used to target ads based on sex and age. And while consumers appreciate a more personalized experience, receiving a message congratulating them on a pregnancy they haven’t mentioned to anyone is sure to raise concern.

How to make targeted advertising more acceptable

The less consumers feel like they are being spied on, the more comfortable they will feel. Some have suggested that targeted ads should feel random. For example, ads for baby items could be interspersed with ads unrelated to babies. Consumers would feel less targeted while still receiving the targeted ads. Some may see this as an ethical issue, much like the way cookie notices and terms and conditions go unread. Consumers are nominally responsible for acknowledging that their data is being used, but that responsibility is overshadowed by the fact that fewer than 10% of people actually pay attention to these notices. In practice, their data is being used without their knowledge.
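The interleaving idea can be sketched in a few lines. This is only an illustration under our own assumptions: `mix_ads` and `targeted_share` are hypothetical names, and real ad servers decide placement in far more elaborate ways.

```python
import random


def mix_ads(targeted, generic, targeted_share=0.5, seed=None):
    """Interleave targeted and generic ads so the feed looks less 'aimed'.

    targeted_share is the chance that the next slot goes to a targeted ad
    while both lists still have ads left (an assumed knob, not a standard one).
    """
    rng = random.Random(seed)
    targeted, generic = list(targeted), list(generic)  # copy so inputs stay untouched
    feed = []
    while targeted or generic:
        # Pick a targeted ad if any remain and either the generic ads ran out
        # or the random draw falls within the targeted share.
        pick_targeted = bool(targeted) and (not generic or rng.random() < targeted_share)
        feed.append(targeted.pop(0) if pick_targeted else generic.pop(0))
    return feed


feed = mix_ads(["nappies", "pram"], ["car insurance", "lawnmower", "wine"], seed=1)
print(feed)
```

Every ad still appears exactly once; only the order is shuffled between the two groups, which is why the ethical question raised above remains.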

An often-recommended strategy is to educate people about data and privacy. Far fewer teen pregnancies occur in places where sex education is implemented. In the same way, education about data can make people more mindful of how their information is being used, so that they can make more informed choices.

The other issue with collecting large amounts of data about people, and predicting new data about them, is that all of this information is vulnerable to security breaches. Digital security is a major concern when talking about personal data. It has been suggested that sensitive information should be automatically redacted from databases so that it is never fed into a machine learning algorithm. Marketers should take care not to include sensitive information such as social security numbers in their algorithms, especially when it is not needed.
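As a rough sketch of what automatic redaction could look like, the snippet below drops fields that are known to be sensitive and masks anything that looks like a US social security number in free text. The field names and the `redact_record` helper are our own assumptions, and a simple pattern like this will miss many real-world formats.

```python
import re

# Matches the common NNN-NN-NNNN layout only; other layouts would slip through.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# Hypothetical column names; a real system would maintain its own list.
SENSITIVE_FIELDS = {"ssn", "social_security_number"}


def redact_record(record):
    """Return a copy of a customer record that is safer to pass to a model."""
    clean = {}
    for key, value in record.items():
        if key.lower() in SENSITIVE_FIELDS:
            continue  # drop the whole field: the model does not need it
        if isinstance(value, str):
            value = SSN_PATTERN.sub("[REDACTED]", value)  # mask SSNs hiding in free text
        clean[key] = value
    return clean


customer = {"name": "A. Shopper", "ssn": "123-45-6789",
            "notes": "Gave SSN 123-45-6789 over the phone", "age": 30}
print(redact_record(customer))
```

Redacting before the data reaches the training pipeline means a later breach of the model's inputs exposes less.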

Another idea is to implement cohort-based targeted advertising, such as Google’s FLoC (Federated Learning of Cohorts). Consumers are placed into cohorts of people who share similar traits, and ads are targeted at those cohorts rather than directly at individuals. The hope is that data can then no longer be linked directly to you.
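A toy illustration of the cohort idea follows. It is loosely inspired by SimHash-style fingerprinting and is not Google's FLoC implementation; `cohort_id`, `group_by_cohort` and the 8-bit cohort size are assumptions made for this sketch.

```python
import hashlib
from collections import defaultdict


def cohort_id(interests, bits=8):
    """Map a set of interests to a small cohort number (toy SimHash-style vote)."""
    votes = [0] * bits
    for interest in interests:
        digest = hashlib.sha256(interest.encode("utf-8")).digest()
        value = int.from_bytes(digest[:4], "big")
        for i in range(bits):
            # Each interest votes +1 or -1 on every bit of the cohort id.
            votes[i] += 1 if (value >> i) & 1 else -1
    # A bit is set when more interests voted for it than against it.
    return sum(1 << i for i, vote in enumerate(votes) if vote > 0)


def group_by_cohort(users):
    """Group user names by cohort; an advertiser would only see the cohort id."""
    cohorts = defaultdict(list)
    for name, interests in users.items():
        cohorts[cohort_id(interests)].append(name)
    return dict(cohorts)


users = {
    "user_a": ["prams", "nappies", "baby food"],
    "user_b": ["baby food", "prams", "nappies"],
    "user_c": ["golf", "whisky"],
}
print(group_by_cohort(users))
```

Users with the same interests land in the same cohort regardless of order, so the advertiser targets the cohort number rather than an individual profile. A real system would also need each cohort to contain enough people to keep any one member from standing out.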

Even if privacy laws are followed to the letter, targeted advertising created by algorithms introduces an element of unpredictability, especially when it is used to predict consumer behaviour from things that we would never otherwise have thought to link together. This makes some people uncomfortable, while others are content to roll with an “out of sight, out of mind” mentality. Either way, algorithms are revolutionising targeted advertising while raising important privacy concerns. As advertisers ourselves, who use advertising platforms to promote our software product, we’ll be closely following the evolution of ad-targeting algorithms and how advertisers tackle the issues that they raise.